Digital Twins In Healthcare

History, Current Applications, Future Applications, Dating Applications, and Hurdles

Hang the DJ is one of the best episodes of the critically acclaimed television series Black Mirror. The episode features a dating simulation in which a device named "Coach" pairs partners together for a randomized period of time. Each person in the simulation goes through a series of potential matches until their "pairing day," when each participant is paired with their supposed ultimate romantic match. But the pairing day is a ruse; in reality, the system tests whether you and your partner would reject your app-assigned "perfect match" (which isn't all that perfect) and rebel against the simulation to be with one another. This coordinated rebellion counts as compatibility, and out of 1000 simulations, the main characters rebelled against the system 998 times. The dating app then assigned a 99.8% compatibility rating to the main characters' real-life counterparts, who finally meet in person.

The Dating Digital Twin

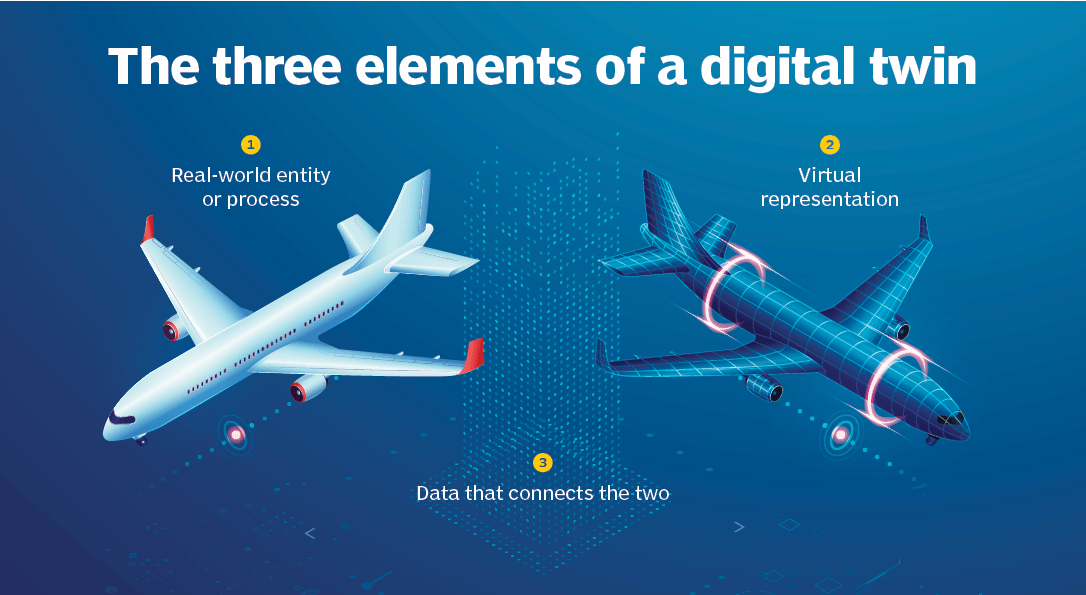

Imagine if a dating app could help us skip the doom-scrolling through suitors and the dry-laughing at pickup lines. How would we make this possible? We would first need a dating digital twin: a digital entity that holistically represents us, shares our mental and emotional disposition, and intimately understands not only our desires but also our red flags and icks. We would also need this digital twin to be mapped to our physical selves in real time to capture our rapidly changing romantic tastes. With these three components (a physical entity, a digital entity, and a real-time connection between the two), we would have a dating digital twin much like the simulation in Hang the DJ.

Digital Twintroduction in Healthcare

So how could our dating digital twin (DT) apply to more useful, real-life situations? Start with its predecessor, the cybercopy: a virtual model of a physical system, whether a process, facility, or infrastructure, that isn't informed by live data. Cybercopies aren't novel; they have been explored and applied in the aerospace and engineering fields for more than half a century. They have historically simulated systems like spacecraft and automobiles to forecast adverse events and conduct testing in silico. Imagine performing a vehicle crash test without destroying a single car, or running a virtual flight simulation to debug potential issues before launching a spacecraft.

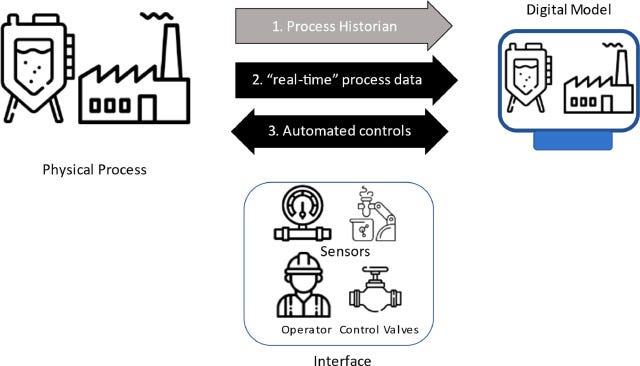

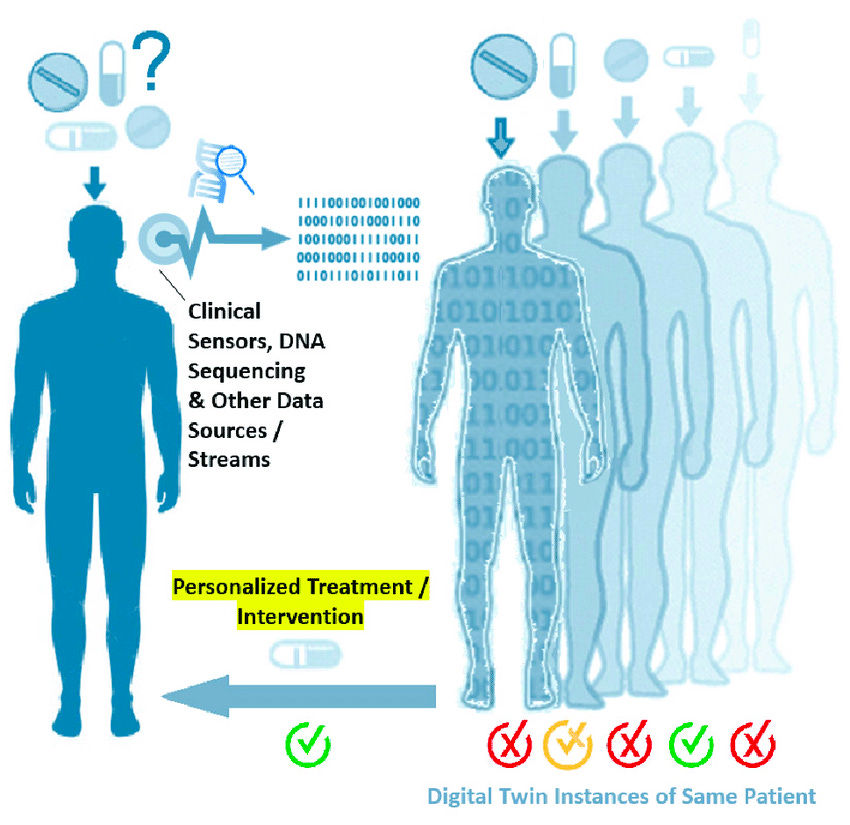

Cybercopies have grown in complexity as their models have become more precise and their applications broader. Most cybercopies are built from real-world data (RWD) captured at a single point in time to forecast process outcomes. However, a static model fitted this way may not be precise enough to extrapolate individualized outcomes in high-precision fields like healthcare. A cybercopy might be helpful for simulating the oxidation of my Fire Red Jordan 5s based on their condition, storage location, and year of manufacture (an application I would gladly pay for). However, a cybercopy is likely inappropriate for simulating tumor progression in a patient without individualized omic or biological data. That individualized, real-time data is what turns a cybercopy into an actual DT. The real-time connection can be one-directional, with data flowing only from the physical process to the digital one, or bidirectional, with the physical process relaying data to the digital entity and the digital entity, in turn, sending intelligent inputs back to operate the physical process more efficiently.
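To make the three variants concrete, here is a minimal, hypothetical Python sketch. The class names, the single-number "state," and the `setpoint`/`gain` parameters are illustrative assumptions, not any vendor's API: a cybercopy is fixed from one historical snapshot, a one-directional twin ingests live readings, and a bidirectional twin also returns a control input to the physical process.

```python
from dataclasses import dataclass, field


@dataclass
class Cybercopy:
    """Static model: state is fixed from a single historical snapshot."""
    state: float

    def predict(self) -> float:
        # No live data ever reaches this model, so its forecast never changes.
        return self.state


@dataclass
class OneWayTwin(Cybercopy):
    """Physical -> digital: the twin's state tracks live readings."""
    history: list[float] = field(default_factory=list)

    def ingest(self, reading: float) -> None:
        # Each live reading from the physical system updates the digital state.
        self.history.append(reading)
        self.state = reading


@dataclass
class TwoWayTwin(OneWayTwin):
    """Physical <-> digital: the twin also sends a control input back."""
    setpoint: float = 0.0  # hypothetical target value for the physical process
    gain: float = 0.5      # hypothetical proportional gain

    def control_input(self) -> float:
        # Proportional correction toward the setpoint (illustrative policy only).
        return self.gain * (self.setpoint - self.state)
```

For example, after feeding readings of 10.5 and 11.0 to each twin, the cybercopy still predicts its original value, the one-way twin reports 11.0, and a two-way twin with `setpoint=12.0` suggests an input of 0.5 to nudge the process upward.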

The most advanced DTs use a bidirectional, real-time data connection for individualized outcome prediction, while more rudimentary models use a real-time, one-directional connection to provide process analytics and insights. As you can imagine, DTs have enormous potential and have recently made their way into healthcare. Even as they grow in sophistication, many human health applications remain untwinned because biological systems and in vivo human-food and human-drug interactions are so difficult to model. Beyond the challenge of building a robust human DT, compute and data storage remain bottlenecks for bringing holistic human models to fruition. Where we have succeeded is in building healthcare DTs for large-scale and localized environments.

Large-scale Healthcare DTs

Preventative management and maintenance have traditionally been elusive in healthcare facilities, but DT technology can help by improving operational efficiency and care coordination. GE Health System's Care Command is a hospital DT that digitizes the patient journey within the hospital care unit and simulates workflow and capacity. Care Command receives a live data stream of patient information, such as aggregated patient demand, patient supply, and planned discharges. It then uses AI to coordinate care and allocate resources efficiently across the health system. During COVID-19, Oregon used Care Command's model to track bed and ventilator capacity across the state, which helped it maximize the utilization of these resources and improve statewide visibility into them. The Mater Hospital in Dublin similarly uses DT technology to evaluate the operations of its radiology department. After implementing its DT, the hospital saw shorter patient wait times and faster imaging turnaround.
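Care Command's internals aren't described here, so the following is only a hypothetical illustration of the kind of calculation a capacity twin might run on its live feed: projecting next-day free beds per unit from current occupancy, planned discharges, and expected admissions, then flagging units headed for a shortfall. The `UnitSnapshot` schema and all numbers are invented.

```python
from dataclasses import dataclass


@dataclass
class UnitSnapshot:
    """One live update for a hospital unit (hypothetical schema)."""
    name: str
    staffed_beds: int
    occupied: int
    planned_discharges: int
    expected_admissions: int


def projected_free_beds(unit: UnitSnapshot) -> int:
    # Free tomorrow = free now + beds released by discharges - beds taken by admissions.
    free_now = unit.staffed_beds - unit.occupied
    return free_now + unit.planned_discharges - unit.expected_admissions


def flag_shortfalls(units: list[UnitSnapshot]) -> list[str]:
    # Units projected to run out of beds are candidates for load balancing.
    return [u.name for u in units if projected_free_beds(u) < 0]


feed = [
    UnitSnapshot("ICU", staffed_beds=20, occupied=19, planned_discharges=1, expected_admissions=4),
    UnitSnapshot("Med-Surg", staffed_beds=40, occupied=30, planned_discharges=5, expected_admissions=6),
]
print(flag_shortfalls(feed))  # ['ICU']
```

A production system would also forecast the admissions term itself rather than take it as given; the point here is only that each live update immediately changes the projected picture.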

Pharmaceutical manufacturing is another large-scale process that DTs can make more efficient. Modeling and simulation are commonplace in pharma manufacturing, but DTs are unique because they run in parallel with the physical system and can therefore simulate many biological and chemical processes simultaneously. DTs with one-directional connections are mainly used for process monitoring, while DTs with bidirectional connections operate more like closed-loop control systems, in which the DT manipulates inputs to achieve desired outputs. Most pharma manufacturers that use DTs leverage a bidirectional connection, which can help them apply Quality by Design (QbD) by reducing variability and offering risk mitigation solutions. A bidirectional connection can, in turn, help manufacturers reduce the number of Process Performance Qualification (PPQ) runs they need for process validation.
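The closed-loop idea can be sketched in a few lines, assuming a deliberately simple plant whose output is an unknown constant times its input. The twin picks an input by inverting its own model (digital to physical), then blends the observed response into its model (physical to digital). The function name, `alpha` smoothing weight, and linear plant are all illustrative assumptions; real bioprocess twins typically pair mechanistic or hybrid models with proper controllers such as model predictive control.

```python
def run_closed_loop(target: float, true_gain: float, initial_gain_guess: float,
                    alpha: float = 0.5, steps: int = 20) -> list[float]:
    """Toy bidirectional loop between a twin and a hypothetical linear plant.

    The plant behaves as output = true_gain * input; the twin only knows its own
    estimate of the gain and refines it from each observed output.
    """
    est_gain = initial_gain_guess
    outputs = []
    for _ in range(steps):
        # Digital -> physical: invert the twin's model to choose the process input.
        u = target / est_gain
        # The physical process responds according to its true (unknown) gain.
        y = true_gain * u
        outputs.append(y)
        # Physical -> digital: blend the observed gain into the twin's estimate.
        est_gain = (1 - alpha) * est_gain + alpha * (y / u)
    return outputs


outputs = run_closed_loop(target=100.0, true_gain=0.8, initial_gain_guess=1.0)
```

The first batch misses the target (80 instead of 100) because the twin's model is wrong, but each round of feedback halves the model error, so later outputs converge on the target. A one-directional twin would report the miss without ever correcting the input.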

A few companies have already digitally twinned their manufacturing processes to more accurately forecast Critical Quality Attributes (CQAs) and Key Performance Indicators based on a set of inputs and Critical Process Parameters (CPPs). Healthcare has become increasingly good at simulating in vitro biological and chemical processes, but another limiting reagent is the quality of the connection between the virtual and physical processes. Additional process monitors and equipment validation can be costly, and the overhead may be hard to justify in the short term, but as adoption increases, we hope to see the price of DT hardware fall. Sanofi and GSK have both explored using DTs in their vaccine manufacturing processes, leveraging machine learning to gain advanced control capabilities and reduce process risks.

Localized Healthcare DTs

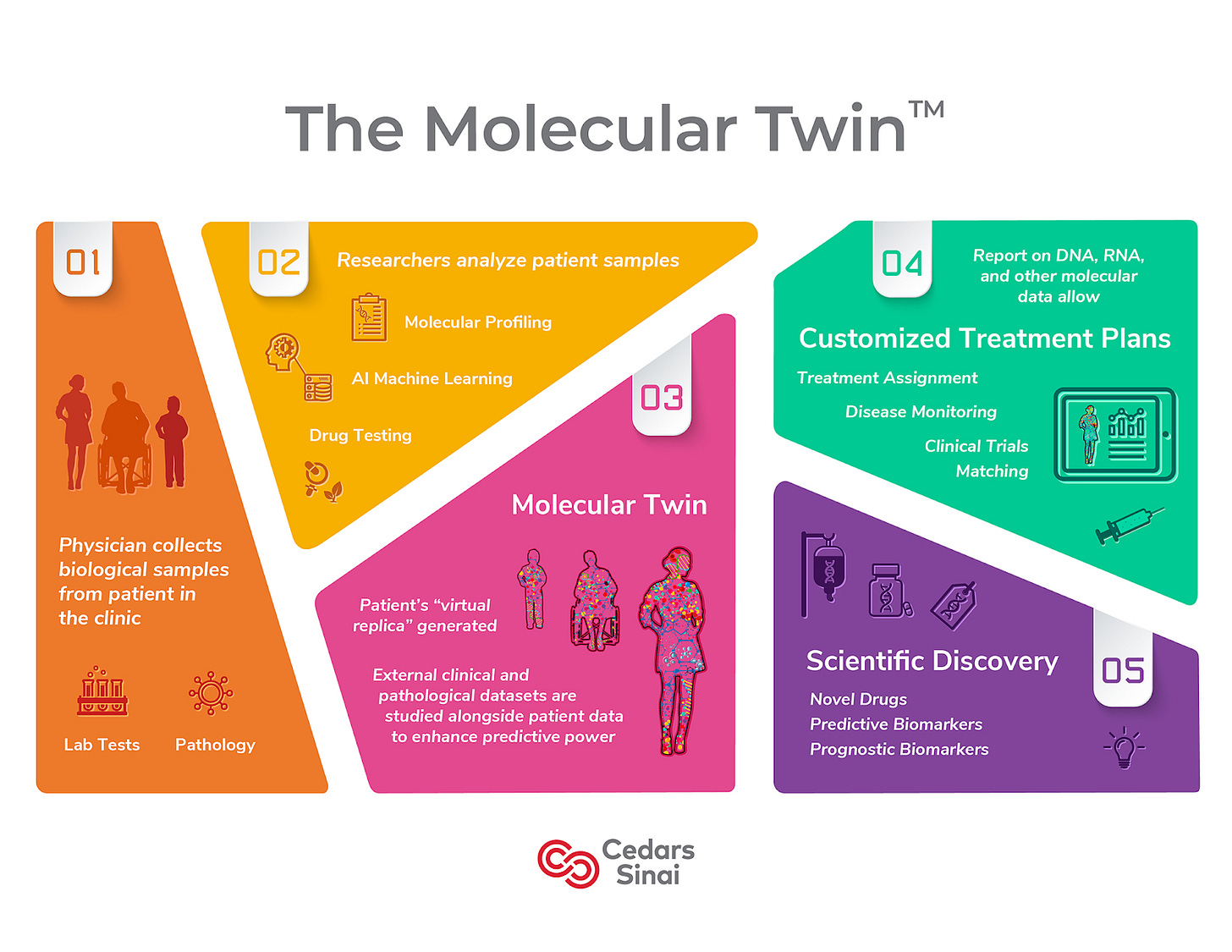

DTs have also helped model and simulate localized processes, mainly pathogenesis and organ and skeletal function. These DTs give companies insights into medical device design and optimization as well as disease progression. Most companies in this space use their DTs for planning or diagnostic purposes. Researchers at Cedars-Sinai Medical Center have created a digital twin of the pancreas that accurately predicts clinical outcomes for patients with pancreatic ductal adenocarcinoma (PDAC). Their model is a virtual computational twin that is updated with new analyte types via a live data connection. Through this research, Cedars-Sinai was able to predict PDAC survival using a single omic modality: plasma proteins. Cedars-Sinai is also developing the Molecular Twin, a precision medicine platform that uses DTs to fight cancer. A team at Johns Hopkins is using DTs to aid surgical planning for patients with arrhythmia. Using clinical imaging and a computational heart model, the team determines precisely where to place each ablation to treat the arrhythmia. Organ digital twins are gaining traction in healthcare and will likely reach mainstream adoption within the next decade.

What Digital Twins Could Mean for Healthcare

The DT healthcare market was valued at $1.17B in 2022 and is projected to grow to around $39B by 2032, a 42.2% CAGR. In this early adoption stage, we've seen healthcare DTs forecast and simulate across a variety of platforms. As adoption increases, I expect to see more computationally complex and individualized models. Below, I highlight some areas where I see potential for DT-catalyzed healthcare disruption in the coming years.
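As a quick sanity check, the three market figures are consistent with one another within rounding: compounding $1.17B at 42.2% for ten years lands near $39.6B, and the growth rate implied by exactly $39B is about 42.0%. The helper names below are just for illustration.

```python
def project(value: float, rate: float, years: int) -> float:
    # Compound growth: V_end = V_start * (1 + r) ** years
    return value * (1 + rate) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    # Invert compound growth: r = (V_end / V_start) ** (1 / years) - 1
    return (end / start) ** (1 / years) - 1


projected_2032 = project(1.17, 0.422, 2032 - 2022)   # ≈ 39.55 ($B)
rate_to_39b = implied_cagr(1.17, 39.0, 2032 - 2022)  # ≈ 0.420
```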

Clinical Trials

Unlearn.AI is one of the companies leading the charge in running clinical trials with DTs. Their model creates a digital twin for each trial participant to produce a DT-forecasted prognosis; they then debias that forecast and compare it with the clinical prognosis to produce an unbiased, forecast-adjusted prognosis. This adjusted prognosis is used to measure the treatment effect and ultimately allows the study's control group to be smaller. DTs will eventually move the needle even further by producing a forecasted treatment outcome, which would help reduce the number of patients in the treatment arms of Phase 2 and Phase 3 studies. Safety is the low-hanging fruit: if we could establish safety during dose escalation by first testing in silico, that would be an exceptional outcome for DTs. The next logical step is in silico efficacy testing, which would improve patient selection and help push the most promising clinical candidates into Phase 3.
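Why does a good prognostic forecast shrink a trial? The sketch below is a textbook normal-approximation sample-size calculation, not Unlearn.AI's implementation: if a prognostic score (such as a twin's forecast) correlates with the outcome at `rho`, adjusting for it as a covariate cuts the residual variance by a factor of `1 - rho**2`, and the required sample size falls proportionally. The effect size, standard deviation, power, and `rho=0.6` are illustrative assumptions.

```python
from math import ceil
from statistics import NormalDist


def n_per_arm(delta: float, sd: float, alpha: float = 0.05, power: float = 0.8,
              rho: float = 0.0) -> int:
    """Per-arm sample size for a two-arm comparison of means (normal approximation).

    rho is the assumed correlation between a prognostic score and the outcome;
    covariate adjustment shrinks the residual variance by (1 - rho**2).
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = z.inv_cdf(power)           # quantile for the desired power
    residual_var = sd ** 2 * (1 - rho ** 2)
    return ceil(2 * (z_alpha + z_beta) ** 2 * residual_var / delta ** 2)


unadjusted = n_per_arm(delta=0.5, sd=1.0)          # 63
adjusted = n_per_arm(delta=0.5, sd=1.0, rho=0.6)   # 41
```

How the savings are distributed (for example, taking them mostly from the control arm) is a separate trial-design decision.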

I see considerable value in companies building foundational disease models and models that simulate drug-disease and human-drug interactions. Because a personalized digital twin for every patient will likely be too costly to be viable for most players in the space, it is extremely important that the data feeding these foundational models be diverse and robust. In the short term, I envision companies engineering cybercopies segmented by omic profile and building outcome probability models that give patients a sense of how they might respond to molecular compounds based on that profile. These models will likely be able to simulate a compound's effects at the cellular level as well as on a patient's clinical endpoints. DTs' computational architecture will likely be derived from these disease and human-drug interaction models, enabling companies to accelerate the hit-to-lead process and gain significant efficiencies in drug development. The Korea Advanced Institute of Science and Technology (KAIST) is already using deep learning models to predict drug-food interactions and previously unknown drug-drug interactions (DDIs) in patients who are co-prescribed medications. Angström AI is leveraging generative AI to run quantum-mechanical models that simulate molecular interactions, which can be used to forecast drug safety and efficacy. CytoReason is also making headway in clinical modeling, having built a model that simulates disease progression at the cellular level. When overlaid with individual omic data and a therapeutic engine, this model can improve patient selection and help generate candidate inclusion/exclusion criteria for a protocol. If we can forecast these interactions in silico, we can significantly shrink clinical studies and shorten clinical development timelines.
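A cybercopy "segmented by omic profile" can be as simple as estimating a response probability for each segment from historical outcomes. The sketch below is hypothetical (the `marker+`/`marker-` labels, the records, and the smoothing priors are invented) and is not any named company's model; additive smoothing keeps small segments from getting extreme 0% or 100% estimates.

```python
from collections import defaultdict


def response_rates_by_segment(records, prior_responses=1, prior_total=2):
    """Estimate P(response) per omic segment with additive (Laplace-style) smoothing.

    records: iterable of (segment_label, responded) pairs, where segment_label
    stands in for an omic profile (e.g., a hypothetical biomarker status).
    """
    counts = defaultdict(lambda: [0, 0])  # segment -> [responders, total]
    for segment, responded in records:
        counts[segment][0] += int(responded)
        counts[segment][1] += 1
    # Smoothed estimate: (responders + prior) / (total + prior total).
    return {
        seg: (r + prior_responses) / (n + prior_total)
        for seg, (r, n) in counts.items()
    }


history = (
    [("marker+", True)] * 8 + [("marker+", False)] * 2
    + [("marker-", True)] * 1 + [("marker-", False)] * 4
)
rates = response_rates_by_segment(history)  # {'marker+': 0.75, 'marker-': ~0.286}
```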

Metabolism & Full-Body Twins

Twin Health is one of the companies at the forefront of developing the full-body digital twin. Their DT helps combat metabolic dysfunction in diabetic patients, but many use cases in this area remain untapped. We're slowly seeing a shift in how we view obesity, with more people seeing it as a disease requiring medical intervention rather than the culmination of poor lifestyle choices. I anticipate that in the near future, more payers and government-sponsored health plans will begin to subsidize weight loss drugs, leading to mass adoption of these drugs, specifically those targeting the GLP-1 receptor. As we get better at simulating and forecasting biological processes, we will better understand human-drug and human-food interactions, and the latter will allow us to construct DTs capable of predicting health outcomes based on lifestyle and dietary decisions.

As with bioprocessing, data connection quality will play a role in the DT's utility. We've yet to see wearable hardware that can efficiently and autonomously capture data points like protein and caloric intake, or psychological changes like stress (although we can approximate these manually). The more multimodal data we can collect through live data connections, the more use cases a model like this will have. Metabolism is an early use case, but I see this expanding to injury prevention and rehabilitation, mental health and wellness, and many more applications. As adoption progresses, I also imagine people will interface more with their DTs, using these twins as a preventive health tool.
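As a deliberately crude illustration of a twin that both ingests live lifestyle data and answers "what if" dietary questions, here is a toy energy-balance model. It assumes the common rule of thumb of roughly 7,700 kcal per kilogram of body-mass change and a fixed maintenance requirement; both are simplifying assumptions (real metabolism adapts), and commercial metabolic twins are far richer than this.

```python
from dataclasses import dataclass, field

KCAL_PER_KG = 7700.0  # Rule-of-thumb energy per kg of body-mass change (crude assumption).


@dataclass
class MetabolicTwin:
    """Toy energy-balance twin updated from a daily live data feed (hypothetical)."""
    weight_kg: float
    maintenance_kcal: float  # assumed constant, which real physiology is not
    log: list[float] = field(default_factory=list)

    def ingest_day(self, intake_kcal: float, activity_kcal: float) -> float:
        # Daily surplus (+) or deficit (-) shifts modeled weight by surplus / KCAL_PER_KG.
        surplus = intake_kcal - (self.maintenance_kcal + activity_kcal)
        self.weight_kg += surplus / KCAL_PER_KG
        self.log.append(self.weight_kg)
        return self.weight_kg

    def forecast(self, days: int, intake_kcal: float, activity_kcal: float) -> float:
        # "What if" scenario: project a planned routine without touching the live state.
        surplus = intake_kcal - (self.maintenance_kcal + activity_kcal)
        return self.weight_kg + days * surplus / KCAL_PER_KG
```

For instance, `MetabolicTwin(80.0, 2200.0).forecast(77, 1900, 200)` projects 75.0 kg, since a 500 kcal daily deficit over 77 days totals 38,500 kcal. The quality of `ingest_day` inputs is exactly the data-connection problem described above.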

Digital Twin Hurdles

I would be remiss not to mention the hurdles on DTs' road to mass adoption. The extent to which regulators accept in silico testing in submissions will dictate how many resources are allocated to DTs in pharma. The FDA has published guidance and frameworks on using computational modeling and simulation (CM&S) in medical device submissions, but only for physics-based models. The FDA does seem open to dialogue with companies using ML-native computational models, but it hasn't explicitly offered guidance on their use in drug discovery and development; I anticipate it will release guidance on CM&S in pharma, and on ML-native computational models, in the near future.

Data infrastructure and computing power, which I touched on briefly, could also hinder DT adoption. The models underlying DTs are growing in complexity and will likely be able to simulate in vivo biological processes and forecast human-molecule interactions. Running these models consistently will likely require cloud infrastructure or additional server GPUs, so pharma companies, payers, and providers (PPPs) must ramp up their compute capabilities to host them. As DTs become more accurate, they will become more personalized, and as adoption grows, system performance must be considered. If DTs are to be used regularly for prevention, running them on a high-throughput, low-latency network is extremely important. Their outputs may signal the need for immediate medical intervention, especially in surgery or emergency medicine, so network efficiency is pivotal. Network infrastructure and computing power should be central considerations when incorporating DTs across healthcare.

Another potential hurdle to DT mass adoption is data. How can we ensure accurate and timely data collection so that these models are genuinely live twins? Who owns the collected data? How can we build privacy and security into these complex models, and how can we preserve them when transferring DT data to end users? Many questions about data stewardship and ownership will arise, and they could become roadblocks to developing more complex DTs. Researchers and companies must keep these issues in mind when building DT solutions.
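One small, concrete piece of the privacy question is pseudonymizing patient identifiers before twin data leaves a secure environment. The sketch below uses a keyed hash (HMAC-SHA256 from Python's standard library) so the same patient always maps to the same token but the token can't be reversed or brute-forced from predictable IDs without the key. The field names and identifier list are hypothetical, key management is assumed to happen elsewhere, and this alone does not amount to full de-identification under regimes like HIPAA.

```python
import hashlib
import hmac
import json


def pseudonymize(patient_id: str, secret_key: bytes) -> str:
    # Keyed hash: stable for the same patient, but not reversible or guessable
    # without the key (unlike a plain unsalted hash of a predictable ID).
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def export_twin_record(record: dict, secret_key: bytes) -> str:
    """Drop direct identifiers and pseudonymize the ID before export (illustrative)."""
    direct_identifiers = {"name", "address", "phone"}  # hypothetical, not exhaustive
    cleaned = {k: v for k, v in record.items() if k not in direct_identifiers}
    cleaned["patient_id"] = pseudonymize(record["patient_id"], secret_key)
    return json.dumps(cleaned, sort_keys=True)
```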

Conclusion

In the not-so-distant future, I envision the premise of Hang the DJ coming to fruition in healthcare. DTs will eventually become a medical standard, in which PPPs' first development step is in silico testing and patients have access to, and interface with, their twins. Instead of dating compatibility, we will have individual target or treatment compatibility. Further down the line, DTs may become ubiquitous, with most people owning and interfacing with their own personalized, full-body digital twin. If properly developed and implemented, DTs can serve as a pillar of preventative health and help us understand how we interact with food, drugs, exercise, stress, and much more.
